So you want to garden... with kids? You're not crazy. You just need a little support. Buy our book on Amazon and visit this blog for some extra-credit kids' gardening projects.

Posts

Showing posts from 2009

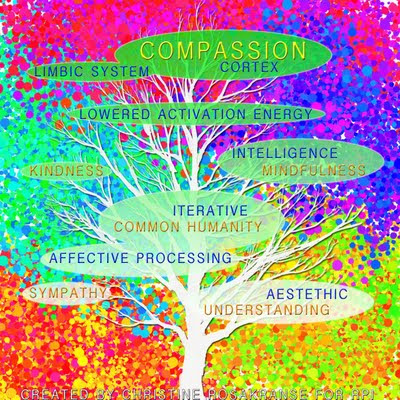

Augmenting Human Compassion: A Conceptual Framework

On OurTube: “Open video” could beget the next great wave in web innovation - if it gets off the ground

New Post at Graduate Blog on Developing Compassion

Douglas Engelbart: Augmenting Human Intellect and Bootstrapping

Remix: On “Open Video in Practice” Technology Review, Vol. 112/No. 5, Sept/Oct 2009 Issue

Developing and promoting your blog: becoming known, respectably

The different theoretical approaches to the concept of presence. What is the best approach?

Nielsen's "Usability" and Some Questions (Part 3/3)

Nielsen's "Usability" and Some Questions (Part 2/3)

Nielsen's "Usability" and Some Questions (Part 1/3)
